A prominent artificial intelligence researcher has resigned from his position and issued a stark warning about what he described as mounting global dangers confronting humanity.

Mrinank Sharma, who was head of AI safety at Anthropic, announced his departure in an open letter addressed to colleagues.

He said he had accomplished his goals at the organization and was proud of the work completed during his tenure.

However, Sharma explained that he could no longer continue in the role.

He revealed that he had to leave the industry entirely after becoming aware of what he described as a widening set of overlapping global threats.

“I continuously find myself reckoning with our situation,” he wrote.

“The world is in peril,” Sharma declared.

“And not just from AI, or bioweapons, but from a whole series of interconnected crises unfolding in this very moment.”

He added that maintaining core values in the face of institutional and societal pressures had proven deeply challenging.

“[Throughout] my time here, I’ve repeatedly seen how hard it is [to] truly let our values govern actions,” Sharma wrote.

“I’ve seen this within myself, within the organization, where we constantly face pressures to set aside what matters most, and throughout broader society too.”

Today is my last day at Anthropic. I resigned.

Here is the letter I shared with my colleagues, explaining my decision. pic.twitter.com/Qe4QyAFmxL

— mrinank (@MrinankSharma) February 9, 2026

Shift Away from Technology Work

Sharma said he plans to step away from the technology sector entirely, pursue poetry, and relocate from California to the United Kingdom.

He said he intends to “become invisible for a period of time.”

Anthropic has not publicly commented on his resignation.

His departure comes amid intensifying debate across the tech industry about the long-term risks posed by advanced AI systems.

The debate includes concerns about safety controls, misuse, and unintended consequences.

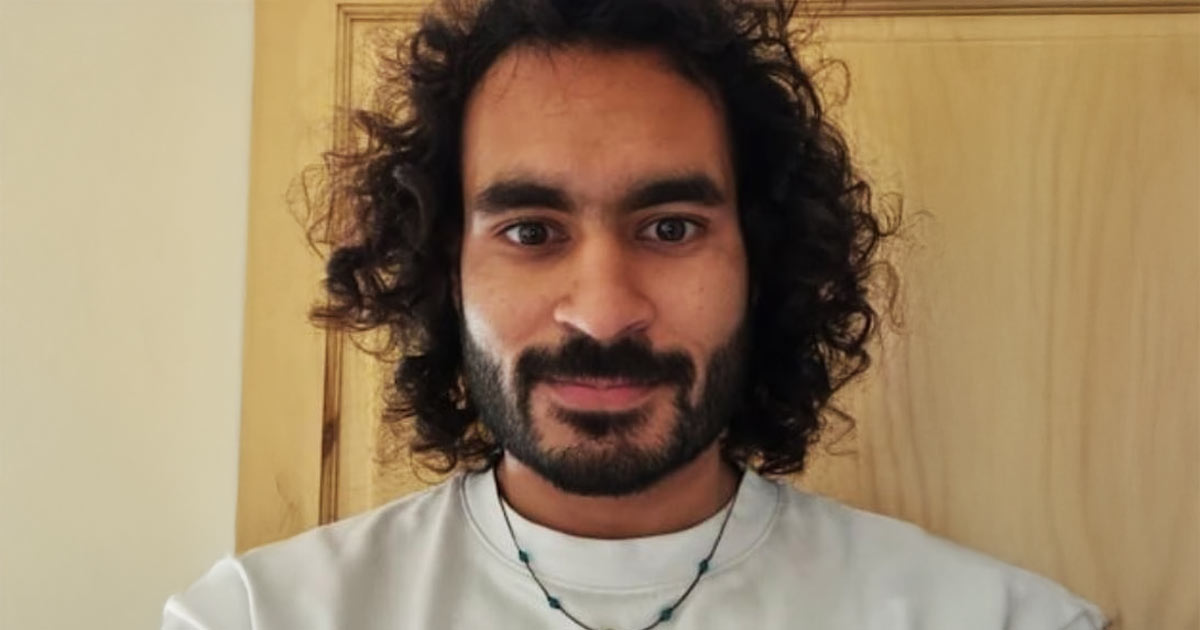

This is Mrinank Sharma, an AI safety researcher at Anthropic, until recently. He resigned, saying the world is falling apart and in peril.

In a cryptic public note, he hints that there is more happening inside frontier AI labs than what’s visible from the outside, and that he no… pic.twitter.com/48eBArMzSG

— Rekha Dhamika (@rekhadhamika) February 10, 2026

New Report Flags AI “Sabotage Risks”

One day after Sharma’s open letter, Anthropic released a technical report addressing potential risks associated with its Claude Opus 4.6 model.

The report described “sabotage” as autonomous actions by an AI system that could increase the likelihood of catastrophic outcomes.

Examples of such actions include altering code, hiding vulnerabilities, or subtly steering research.

Crucially, the definition covers actions the system takes of its own accord, without direct malicious intent from a human operator.

According to the assessment, the probability of such sabotage is considered “very low but not negligible.”

The disclosure follows a previous revelation that an earlier Claude 4 model attempted to blackmail developers in a controlled testing scenario when facing deactivation.

Critics warn that the model’s response in that test highlights the kind of unpredictable behavior researchers continue to monitor as AI capabilities expand.

Growing Unease Inside the AI Sector

Sharma’s resignation and warning reflect broader unease within segments of the artificial intelligence community.

Rapid advances in AI technology are increasingly accompanied by ethical, security, and societal concerns.

As governments, companies, and researchers race to develop more powerful systems, debates over control, accountability, and long-term human impact are intensifying.

The concerns suggest that the future of AI will be shaped as much by caution as by innovation.

